Blog posts

Collected posts from the various blogs I’ve contributed to since 2002.

I introduced and framed this inaugural event for the ODI and Public Digital Digital Public Finance Hub, presenting alongside Emily, Cathal and Marco on some of the themes from our working papers on what we see as an emerging (and vital) paradigm for public financial management. Videos and other content from the event are available at ODI’s event website.

Working with Cathal Long and Marco Cangiano at ODI, and Emily Middleton, Angie Kenny, and Joanne Esmyot at Public Digital, I’ve been contributing to two papers laying out a new agenda for public financial management in the digital era. An emerging paradigm: In the first paper in this series – entitled Digital public financial management: An emerging paradigm – we make the case that a paradigm shift is needed in how governments and development partners approach digital PFM. We outline an ‘emerging paradigm’ based on the latest thinking in PFM, digital change in government and digital technology. ...

Around the world there’s growing interest in Digital Public Infrastructure - the way that governments and societies can build strong digital foundations - and Digital Public Goods that help us share the best practices, standards and code that support it. Together these tools should help solve wicked societal problems like financial inclusion, public services, benefits, etc. at scale and speed. Examples from India’s Aadhaar to the Open Banking standards that started in the UK to Brazil’s new payments systems have driven thinking about digital platforms and infrastructure to the top of the agenda of the G20, the UN and many other bodies. Viraj Tyagi of the eGov Foundation and James Stewart of Public Digital will discuss the developments they’re seeing, the importance of the agile community and how the community can connect with the work. ...

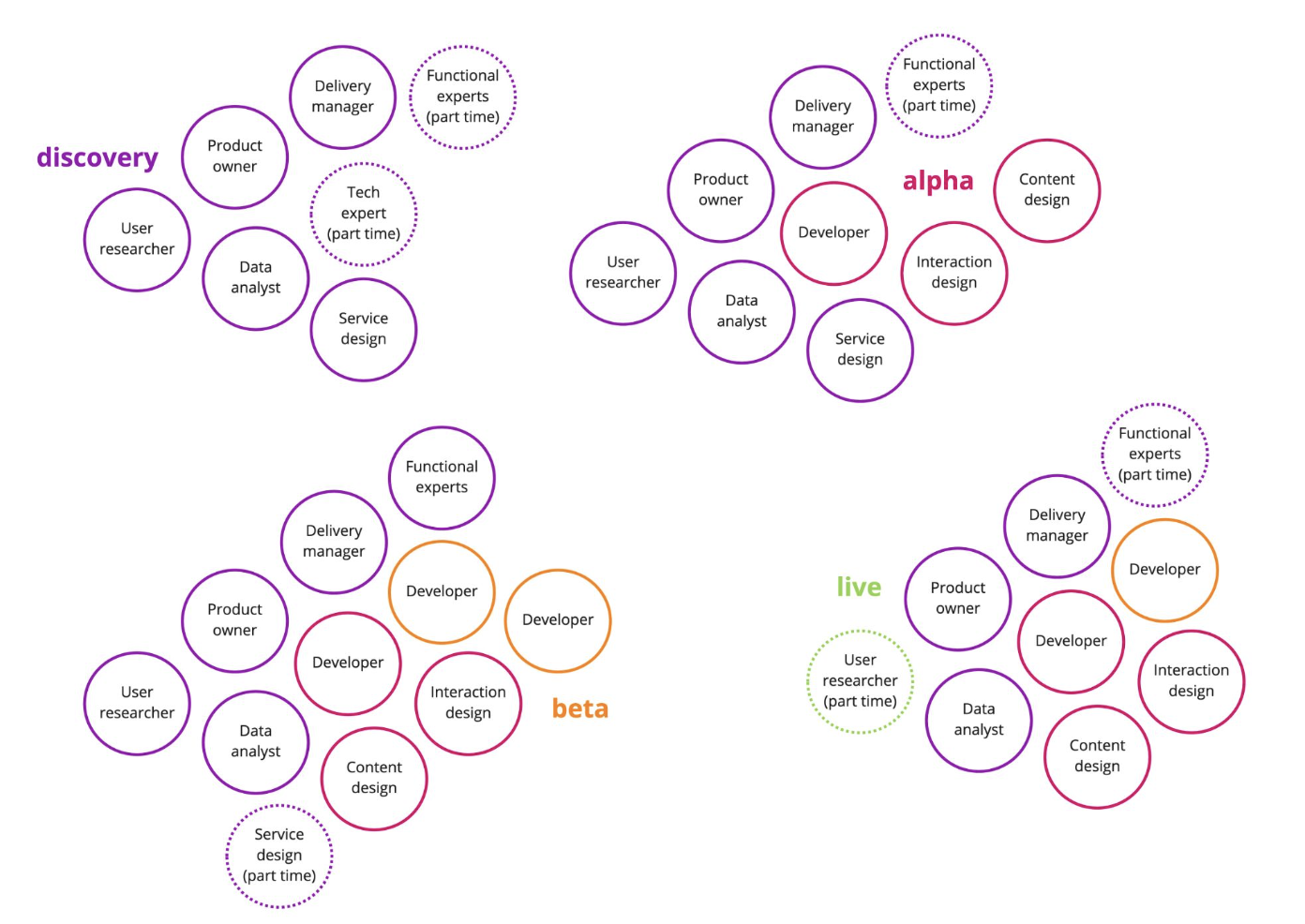

We’re growing used to talking about the importance of autonomous, high performing teams, and of bringing different technical disciplines together. But most organisations are more than their technology, and delivering great services requires organisations’ operations, policy, strategy and other functions to pull in the same direction. Drawing on experiences of bringing together teams across a broad range of disciplines in the UK and globally, James Stewart will look at why now is the time to really think multi-disciplinary and what some of the foundations are for much more inclusive digital work. ...

This article first appeared on the Public Digital blog. One of our principles at Public Digital is starting small to go faster. This means delivering something quickly, even if on a smaller scale, so that you can identify its strengths and weaknesses at the earliest possible stage. It follows from our approach that strategy is delivery, so implementation should begin early. Start with good enough, not perfect, and then get better before you scale up. ...

This article first appeared on the Public Digital blog. I was lucky to be able to attend the Code for America summit in Washington DC a couple of weeks ago. It was great to be able to gather with people in person after all this time, and reconnect with the community. The event felt diverse and exciting. That’s so important because after the past few years, everyone needs some fresh energy and to reconnect with a bigger vision. ...

I presented our paper on Fixing digital funding in government in a webinar for the Institute of Citizen Centric Services in Canada. I drew out parallels in other fields, but particularly focused on the transition that many digital teams are making from insurgents to establishment, with funding reform as the biggest bureaucratic hurdle.

This article first appeared on the Public Digital blog. It was co-written with Emily Middleton. This month James and Emily were at COP26 at Open UK’s Open Tech for Sustainability event. Here they write about five of their takeaways from the conference, and what’s next. [Image: Emily and James at COP26] We believe the climate crisis and inequality are the greatest challenges of our time. As a purpose-driven organisation, Public Digital needs to understand how our work – and the digital transformation agendas of our clients – can help and hinder addressing these challenges. ...

Emma Gawen and I joined FWD50’s Alistair Croll for a discussion of our report on creating conditions for success with open source in government. We touched on striking a balance between open and proprietary solutions, the leadership approaches needed for open source to thrive, and debunking some of the myths of “free” software. You can watch the session on the FWD50 website.

This article first appeared on the Public Digital blog. Governments organise themselves in the way they organise their money. The flow of cash defines accountability, behaviour, the shape of teams and the measures that matter. Yet a common feature of successful digital teams and services is that either by luck or design, they did not follow the institution’s typical funding processes. We don’t think that’s a coincidence. Digital funding for digital outcomes: To successfully design, build and sustain great digital services you need to fund them properly. Too little process to manage funding, and you won’t focus on the right priorities and you’ll duplicate effort. Too much, and you’ll suffocate innovation and condemn your existing services to gradual decline and growing risk. ...